'Technology trust is a good thing, but control is a better one.' - Stephane Nappo

For those who don't want to read the whole article: the Terraform module is available on GitHub.

As our reliance on computer systems and the conveniences they provide keeps growing, everyone, down to individual users, needs some form of cybersecurity tool, method, or measure. Without cybersecurity, we actively jeopardize the information our customers have entrusted to us, as well as their reputation. The stakes are even higher if we operate in, for example, the medical sector, where human lives are on the line.

In this blog post, I'll describe the processes and tools that enable Cobalt to govern and improve its security posture, protecting our customers' valuable data and well-being.

First, we need to cover some basics.

Google Cloud Platform (GCP)

Infrastructure as a service (IaaS)

GCP is the preferred cloud provider for Cobalt.

GCP is a public cloud provider that offers resources hosted in its data centers around the globe, some for free (within free-tier limits) and the rest on a pay-per-use basis. Google runs its own end-user products on the same infrastructure, a practice also known as "eating your own dog food." As with other cloud providers, GCP's API is mature and well supported, so managing its resources with a tool is, or at least should be, the default. The alternative is the dreaded "click-ops": managing resources by hand in the web console.

Sumo Logic (Sumo)

Security Information and Event Management (SIEM)

Sumo is a cloud-based machine data analytics company focused on security, operations, and business intelligence. In simpler terms, it's a SaaS product that collects events, metrics, and logs from various data sources and makes them available for analysis, alerting, pre- and post-processing, and forwarding.

Terraform

Infrastructure as Code (IaC)

IaC is one of the latest steps in the continuous improvement of the application deployment process and its surrounding ecosystem. Terraform is the de facto IaC tool: it lets us manage and control resources across many products using a common configuration syntax, the HashiCorp Configuration Language (HCL). Its Create, Read, Update, Delete (CRUD) lifecycle and ease of use let us take control of our infrastructure, improve it, and keep it secure, standardized, and stable. For example, the infrastructure configuration and its resources are "codified" and checked into a version control system (VCS), which enables us to collaborate on the code and check it against specific policies and standards.
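To make the CRUD cycle more concrete, here is a minimal sketch of a single codified resource. The project ID, topic name, and label are hypothetical placeholders. The comments summarize how Terraform typically handles changes to this resource type; this is not taken from our production code.

```hcl
# Hypothetical example: one Pub/Sub topic, codified and checked into VCS.
# On every `terraform plan`/`terraform apply`, Terraform reads (R) the real
# resource, compares it with this block, and then:
#   - creates (C) the topic if the block is new,
#   - updates (U) it in place if only `labels` changed,
#   - deletes (D) it if the block is removed from the configuration.
resource "google_pubsub_topic" "example" {
  project = "some-project-name" # hypothetical project ID
  name    = "example-topic"     # hypothetical; changing it forces a replacement

  labels = {
    team = "security" # hypothetical label; editable without recreating the topic
  }
}
```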

Modularity

One of the better features of Terraform is modules.

A module is a container for multiple resources that are used together.

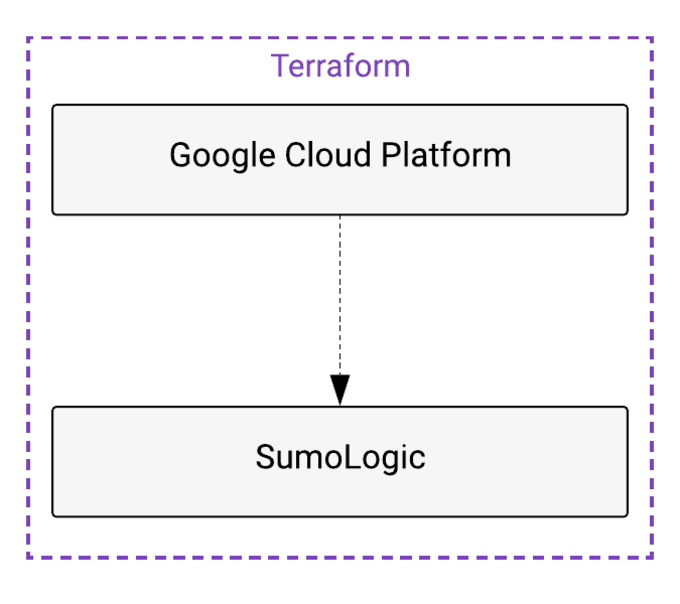

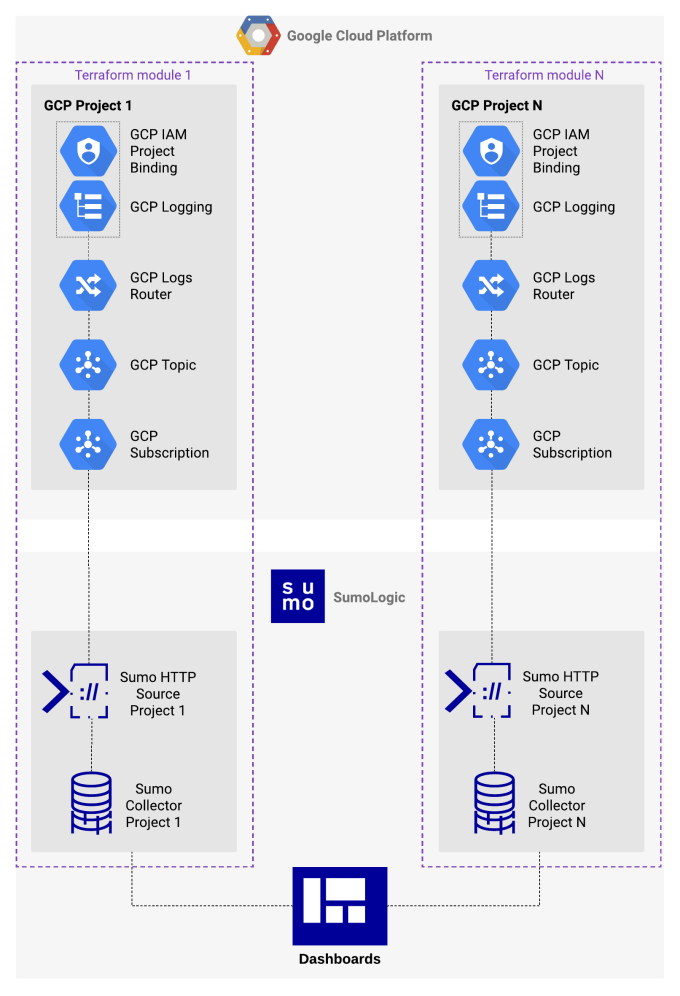

Terraform modules are reusable, versionable, testable, and callable from within other modules. They encapsulate the repetitive parts of an infrastructure setup, making the code flexible, interchangeable, and modular. See Figures 1.1 and 1.2.

```hcl
locals {
  project = "some-project-name"
  name    = "sumologic"
}

module "gcp_sumologic" {
  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  name        = local.name
  gcp_project = local.project
}
```

Figure 1.1. Simple example of a Terraform Module in HCL.
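Because a module is callable, you can repeat the same call instead of copying resources around. The sketch below shows one call wiring up several projects. It assumes the module declares no provider blocks of its own, which Terraform requires for modules called with `for_each`. The project IDs are hypothetical.

```hcl
locals {
  # Hypothetical project IDs; one module instance is created per entry.
  projects = toset(["project-a", "project-b"])
}

module "gcp_sumologic" {
  for_each = local.projects

  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  # Derive a distinct name per project so the Sumo-side resources, which
  # share one Sumo account, are easy to tell apart.
  name        = "sumologic-${each.key}"
  gcp_project = each.key
}
```

Each instance is then addressable as `module.gcp_sumologic["project-a"]`. Adding another project is a one-line change to the set.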

IaC, IaaS, and SIEM

Figure 2. Terraform Module For Google Cloud Platform and Sumo Logic.

At Cobalt, we take cybersecurity seriously. Our Pentest as a Service (PtaaS) model relies heavily on it, so we treat it as a first-class citizen. To achieve the desired level of security, we use most of the tools at our disposal. Our current process sends all events to our SIEM (see Figure 2). This allows our security team to analyze and respond to threats in near real time and to act on predefined patterns and behaviors. We also retain these events for future audits.

For this purpose, we developed and published a Terraform module. Once applied, it lets events flow from our IaaS (GCP) to our SIEM (Sumo). Unsurprisingly, we use the module in production.

To showcase the module's full capabilities, we need to get a bit technical. We won't cover Terraform basics here, so we assume that:

- Terraform (>= v1.0.11) is installed.

- Credentials for GCP and Sumo are configured properly.

- A GCP project is up and running.
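For reference, a root configuration that meets these assumptions could start like the sketch below. The provider source addresses are the public Terraform Registry ones. The project ID is a placeholder. We assume credentials come from the environment rather than being hard-coded.

```hcl
terraform {
  # Minimum Terraform version assumed in this post.
  required_version = ">= 1.0.11"

  required_providers {
    google = {
      source = "hashicorp/google"
    }
    sumologic = {
      source = "SumoLogic/sumologic"
    }
  }
}

# Uses Application Default Credentials, e.g. from
# `gcloud auth application-default login` or GOOGLE_APPLICATION_CREDENTIALS.
provider "google" {
  project = "some-project-name" # placeholder project ID
}

# Assumes the access ID, access key, and deployment are supplied through the
# SUMOLOGIC_ACCESSID, SUMOLOGIC_ACCESSKEY, and SUMOLOGIC_ENVIRONMENT variables.
provider "sumologic" {}
```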

Alerting on and responding to potential threats are out of scope for this blog post. We'll focus on the setup that enables our security team to do its job faster and more efficiently.

The Example

For this example, a temporary GCP project with the ID "elevating-security-1665822506" was created beforehand.

Using the module is as simple as copying, pasting, and applying this Terraform configuration template:

```hcl
locals {
  project = "elevating-security-1665822506"
  name    = "elevating-security"
}

module "gcp_sumologic" {
  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  name        = local.name
  gcp_project = local.project
}
```

Terraform Init And Terraform Apply

terraform init downloads all the necessary providers and modules and initializes the backend.

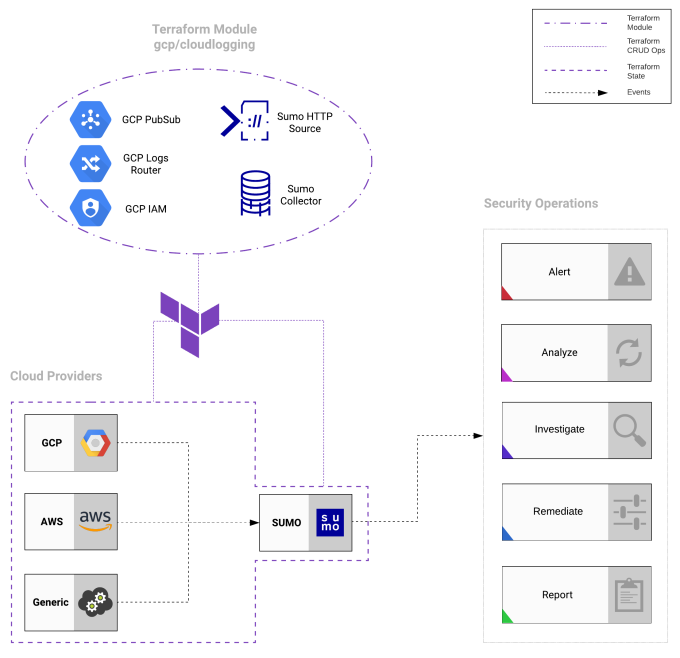

terraform apply then creates the whole system, which is composed of the following resources (see Figure 3):

- GCP Logging Project Sink

- GCP IAM Binding

- GCP PubSub Topic

- GCP PubSub Subscription

- Sumo GCP Source

- Sumo Collector

Figure 3. A Terraform module in detail.

How It All Works Together

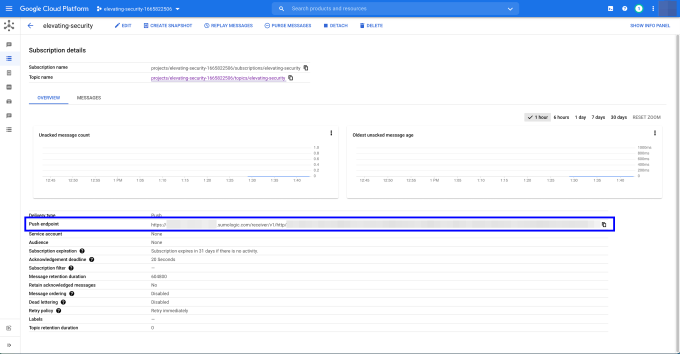

Inside the module, the sumologic_gcp_source resource creates an HTTPS endpoint in Sumo. That endpoint, the "event receiver," is set as the value of the push_endpoint argument of the google_pubsub_subscription resource. See Figure 4.1.

```hcl
resource "google_pubsub_subscription" "sumologic" {
  project              = var.gcp_project
  name                 = var.name
  topic                = google_pubsub_topic.sumologic.name
  ack_deadline_seconds = 20

  push_config {
    push_endpoint = sumologic_gcp_source.this.url # Sumo GCP Source URL - Event Receiver
  }
}
```

Figure 4.1. google_pubsub_subscription resource in Terraform.
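Figure 4.1 references sumologic_gcp_source.this.url, but it doesn't show the Sumo-side resources behind that value. Below is a rough sketch of them, modeled on the Sumo Logic provider's documented example. The category value is a hypothetical placeholder. The module's actual arguments may differ, so check its source before relying on this.

```hcl
variable "name" {
  type        = string
  description = "Name shared by the Sumo collector and source."
}

# Hosted collector in Sumo that groups the GCP source.
resource "sumologic_collector" "this" {
  name        = var.name
  description = "Receives GCP logs pushed by a Pub/Sub subscription"
}

# GCP source; its exported `url` attribute is the HTTPS event receiver that
# the Pub/Sub subscription pushes to (push_endpoint in Figure 4.1).
resource "sumologic_gcp_source" "this" {
  name         = var.name
  category     = "gcp/${var.name}" # hypothetical source category
  collector_id = sumologic_collector.this.id
}
```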

In the GCP project, we can see (heavily redacted) that the value of sumologic_gcp_source.this.url is set as the subscription's "Push endpoint." See Figure 4.2.

Figure 4.2. GCP PubSub subscription with Sumo push endpoint.

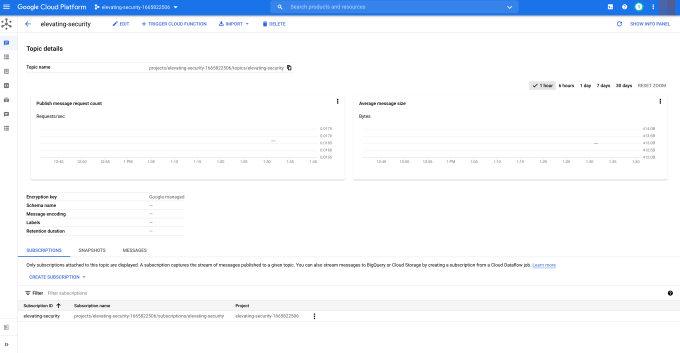

The subscription is attached to a topic. Every event published to the topic is delivered to the subscription, which pushes it on to Sumo. The events are log entries from Cloud Logging that reach the topic through the Logging Sink. See Figure 5.1.

Figure 5.1. A PubSub topic in GCP.

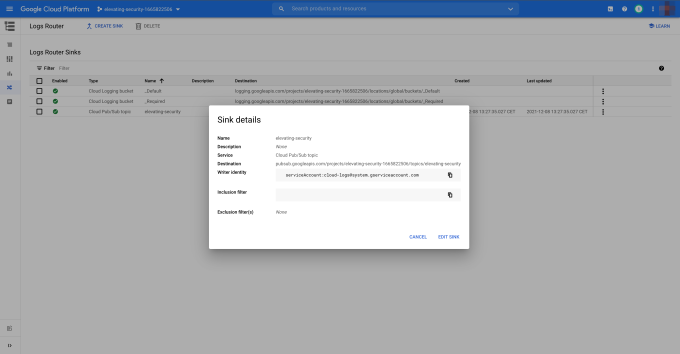

Going further, we see that the Logging Sink has the elevating-security PubSub topic as its destination. See Figure 5.2.

Figure 5.2. A Logging sink in GCP with a topic set as a destination.
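The remaining GCP resources from the list above (topic, sink, and IAM binding) can be sketched the same way. This is our illustration, not the module's source:

- The topic receives the exported entries.
- The project sink routes matching entries to the topic.
- An IAM binding grants the sink's writer identity permission to publish.

The empty default filter is an assumption that corresponds to forwarding every log entry in the project.

```hcl
variable "gcp_project" {
  type = string
}

variable "name" {
  type = string
}

variable "logging_sink_filter" {
  type    = string
  default = "" # assumption: an empty filter routes every log entry
}

# Topic that receives the exported log entries.
resource "google_pubsub_topic" "sumologic" {
  project = var.gcp_project
  name    = var.name
}

# Project-level sink: routes log entries matching the filter to the topic.
resource "google_logging_project_sink" "sumologic" {
  project     = var.gcp_project
  name        = var.name
  destination = "pubsub.googleapis.com/${google_pubsub_topic.sumologic.id}"
  filter      = var.logging_sink_filter

  # Create a dedicated service account (writer identity) for this sink.
  unique_writer_identity = true
}

# Let the sink's writer identity publish to the topic. Note that an
# *_iam_binding resource is authoritative for this role on the topic.
resource "google_pubsub_topic_iam_binding" "sumologic" {
  project = var.gcp_project
  topic   = google_pubsub_topic.sumologic.name
  role    = "roles/pubsub.publisher"
  members = [google_logging_project_sink.sumologic.writer_identity]
}
```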

This setup forwards all events in a GCP project to Sumo. However, forwarding everything is not always the best approach, a point that is often overlooked with events from the VPC service. Those events carry a lot of information that can quickly "eat up" the receiving platform's subscription plan if the SIEM is billed by volume.

For such cases, we made the Terraform module as versatile as possible. It accepts a logging sink filter variable, logging_sink_filter, that lets us set logging filters and omit logs that are not relevant to our use case.

The Terraform module can be customized further through its input variables. Head over to the README.md for more information.

A good example is a filter that omits all logs with the gce_subnetwork resource type, which includes the VPC Flow Logs. See Figure 6.

```hcl
locals {
  project = "elevating-security-1665822506"
  name    = "elevating-security"
}

module "gcp_sumologic" {
  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  name        = local.name
  gcp_project = local.project

  logging_sink_filter = "resource.type!=\"gce_subnetwork\"" # GCP Project Logging Sink Filter
}
```

Figure 6. Passing the logging_sink_filter variable to the module.
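Assuming the module hands the string to the sink unchanged, the filter can use the full Logging query language. Here is a sketch of a narrower variant:

- It drops only the VPC Flow Logs, using the `log_id()` function.
- It still forwards other gce_subnetwork entries, such as firewall rule logs if they are enabled.

A heredoc avoids escaping the inner quotes. Treat the exact filter as an assumption and validate it in the Logs Explorer first.

```hcl
locals {
  project = "elevating-security-1665822506"
  name    = "elevating-security"
}

module "gcp_sumologic" {
  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  name        = local.name
  gcp_project = local.project

  # Exclude VPC Flow Logs only; other gce_subnetwork entries are still forwarded.
  logging_sink_filter = <<-EOT
    NOT (resource.type="gce_subnetwork" AND log_id("compute.googleapis.com/vpc_flows"))
  EOT
}
```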

Making Sure It Works

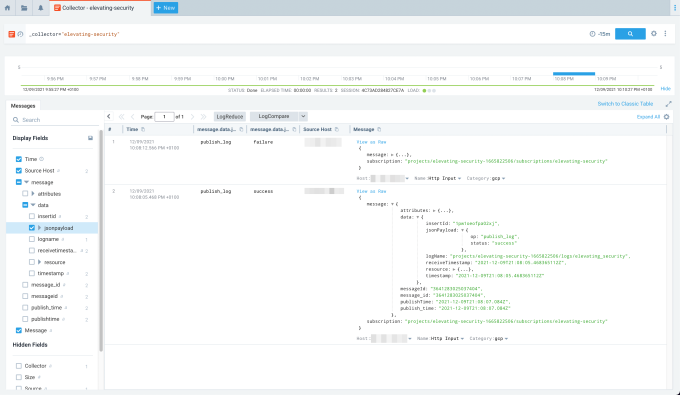

In an active GCP project, events would start appearing in Sumo right away. Otherwise, we can simulate an event. Let's write a log entry with the gcloud command-line tool and check whether it shows up in Sumo. See Figures 7.1 and 7.2. Note that there may be a slight delay before the log appears in Sumo.

```shell
$ gcloud logging write \
    elevating_security \
    '{"op": "publish_log", "status":"success"}' \
    --payload-type=json \
    --project=elevating-security-1665822506
Created log entry.

$ gcloud logging write \
    elevating_security \
    '{"op": "publish_log", "status":"failure"}' \
    --payload-type=json \
    --project=elevating-security-1665822506
```

Figure 7.1. Using the gcloud command-line tool to send a JSON payload.

We can see that the dummy log that we pushed from the command line is in Sumo.

Log Filters

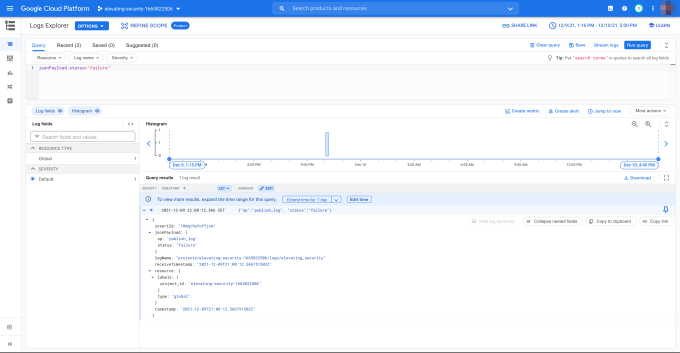

Let's assume that we want to forward only the logs with "status": "failure," and that we have no idea how to use GCP's query language. We need to construct the filter and verify that it works as intended. The query builder in the GCP Logs Explorer shows whether the filter itself is valid and displays the matching logs on screen. See Figure 8.1. Once we are satisfied with the result, we can copy the filter string into the child module's input variable and run terraform apply.

Figure 8.1. Using the query-builder in GCP Logs Explorer.
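In this example, the filter built in the query builder goes straight into the module's logging_sink_filter input. It is the same filter used with gcloud logging read further down. A minimal sketch, repeating the locals from the earlier example so it stands alone:

```hcl
locals {
  project = "elevating-security-1665822506"
  name    = "elevating-security"
}

module "gcp_sumologic" {
  source  = "SumoLogic/sumo-logic-integrations/sumologic//gcp/cloudlogging/"
  version = "v1.0.7"

  name        = local.name
  gcp_project = local.project

  # Filter copied from the Logs Explorer query builder: only entries whose
  # JSON payload has status "failure" are routed to Sumo.
  logging_sink_filter = "jsonPayload.status=\"failure\""
}
```

After terraform apply, new entries like the "success" one written earlier would stay in Cloud Logging and no longer reach Sumo.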

Another approach is to use the gcloud command-line tool to read and filter logs in the terminal. See Figure 8.2.

```shell
$ gcloud logging read 'jsonPayload.status="failure"' --limit 10 --format json --project=elevating-security-1665822506
[
  {
    "insertId": "qnezvufhscsdr",
    "jsonPayload": {
      "op": "publish_log",
      "status": "failure"
    },
    "logName": "projects/elevating-security-1665822506/logs/elevating_security",
    "receiveTimestamp": "2021-12-09T11:27:23.194853267Z",
    "resource": {
      "labels": {
        "project_id": "elevating-security-1665822506"
      },
      "type": "global"
    },
    "timestamp": "2021-12-09T11:27:23.194853267Z"
  }
]
```

Figure 8.2. Reading logs from GCP with the filter 'jsonPayload.status="failure"' from the command line.

In terms of capabilities and scope, this only scratches the surface. To keep this blog post focused and leave room for future ones, I'll cut it short here.

I hope you found this blog post useful and that it gave you some insight into how we deploy and maintain secure infrastructure using IaC, IaaS, and SIEM workflows.

If you have any suggestions or proposals, feel free to email me at nikola@cobalt.io.